Introduction

Recently, I purchased the SK-TDA4VM development kit, and all that I have done with it so far is conduct a preliminary investigation into powering the kit from a Raspberry Pi's USB Type-C power supply. For a development kit that cost over a hundred quid, that's not good enough: by now one would expect the board to be earning its keep alongside its competitors in my machine learning armoury (Google's Coral Mini, Nvidia's Jetson Nano, Xilinx's Ultra96v2, Intel's CYC1000, Lattice Semiconductor's ECP5UM-45F-VERSA, etc.).

The delay in getting started with this kit is not unjustified, however. I have been developing a wireless data logger to monitor the power consumption of the SK-TDA4VM in real time. The kit has a recommended power supply of 65W, much more than the 18W maximum available from the RPi4-compatible supply that's currently being used. Hence, by monitoring and logging the power, I should have an idea, during deployment, of which peripherals I can and can't use. I'll post an article on the project when it's complete.
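To make the power-budget reasoning concrete, here is a minimal sketch of the kind of check the logged data would feed. Only the 65W and 18W figures come from the text above; the `Load` class, the `fits_budget` helper, the 10% safety margin and every per-peripheral wattage are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass

RECOMMENDED_SUPPLY_W = 65.0  # recommended supply for the SK-TDA4VM (from the text)
AVAILABLE_SUPPLY_W = 18.0    # maximum from the RPi4-compatible supply (from the text)


@dataclass
class Load:
    name: str
    watts: float  # hypothetical figure, to be replaced with logged measurements


def fits_budget(loads, budget_w=AVAILABLE_SUPPLY_W, margin=0.1):
    """Return (ok, total_w, headroom_w) for a set of loads.

    A fraction of the budget (margin) is held back as a safety reserve.
    """
    usable_w = budget_w * (1.0 - margin)
    total_w = sum(load.watts for load in loads)
    return total_w <= usable_w, total_w, usable_w - total_w


if __name__ == "__main__":
    # Placeholder draws only; none of these numbers are measurements.
    loads = [Load("board_idle", 8.0), Load("usb_camera", 2.5), Load("display", 3.0)]
    ok, total, headroom = fits_budget(loads)
    print(f"total={total:.1f} W, headroom={headroom:.1f} W, ok={ok}")
```

Once real measurements are available, the placeholder wattages can be swapped for logged values, and `budget_w` can be set to `RECOMMENDED_SUPPLY_W` to compare against the recommended supply.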

Before I go on to discuss the SK-TDA4VM's workflow, a quick précis on EdgeAI is in order.

SK-TDA4VM on the Artificial Intelligence Edge

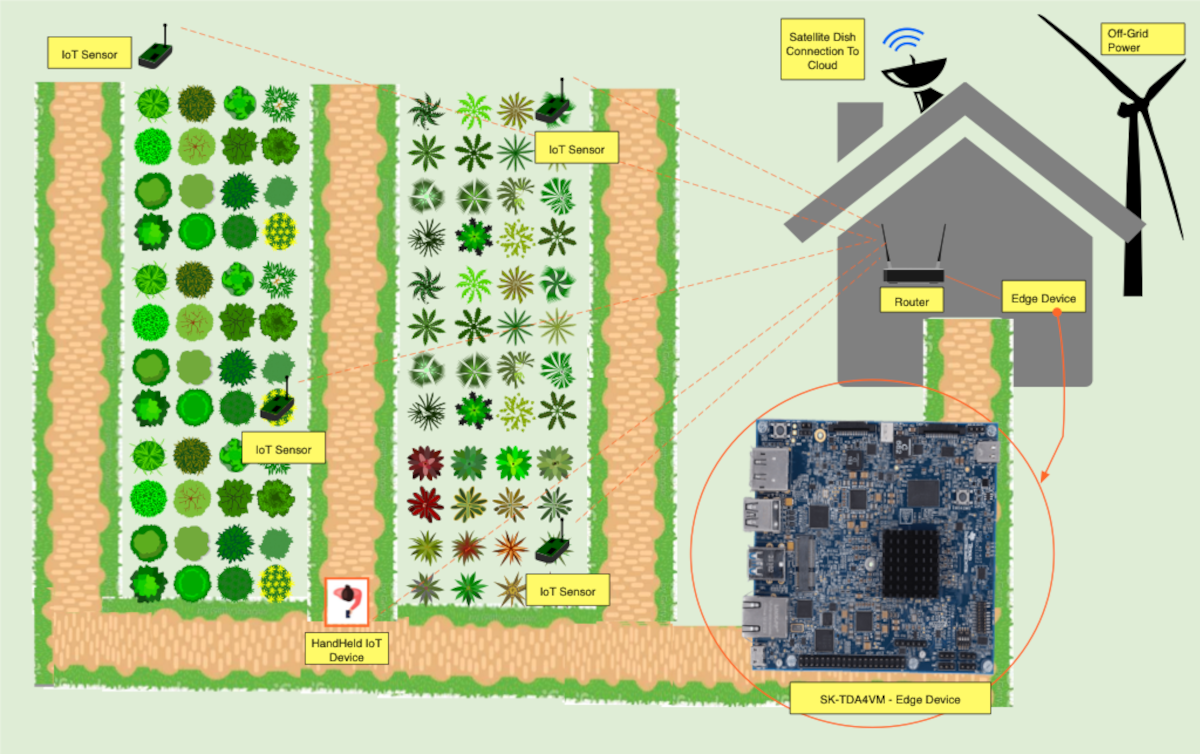

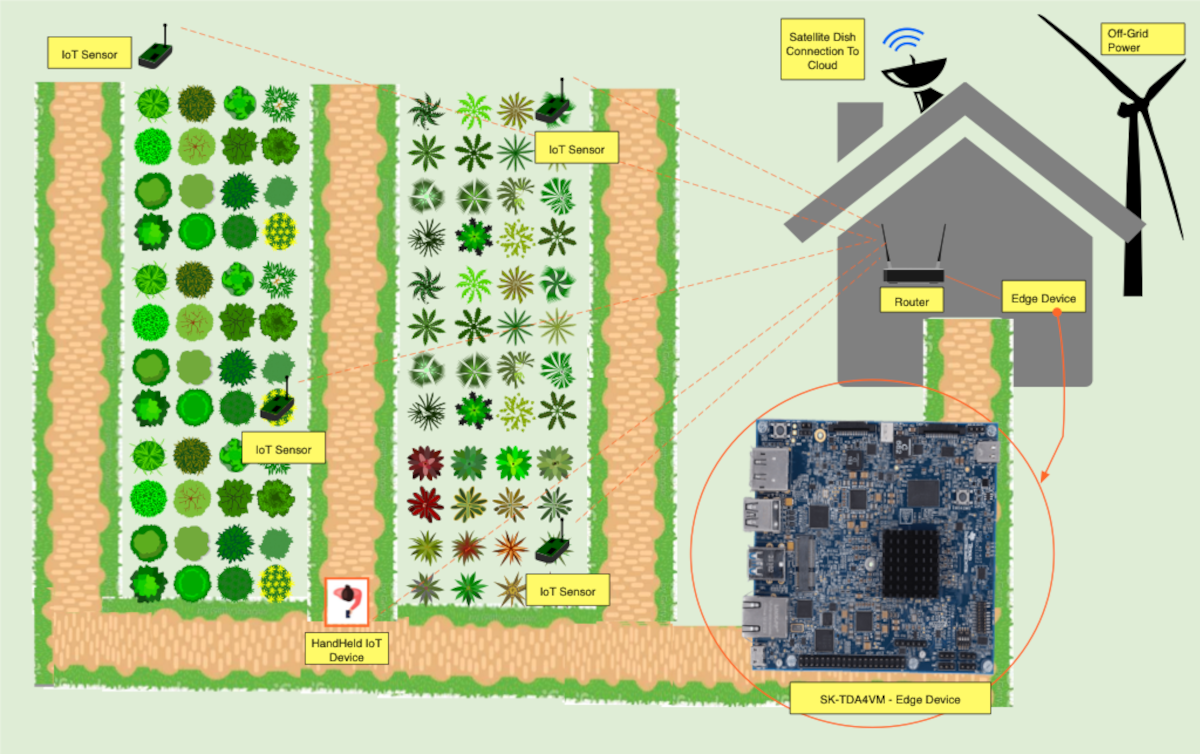

There are several definitions of EdgeAI, which all differ slightly in meaning. The definition that suits my use case is for the SK-TDA4VM to be used as an EdgeAI device that offloads machine learning inferencing from the Cloud. That is, the starter kit is used as a localised cloud server, a halfway house that sits between the Cloud and IoT devices. Hence, machine learning inferencing is performed neither in the Cloud nor on small-footprint IoT devices (using, for example, TinyML). Instead, inferencing takes place on the SK-TDA4VM, with the individual results relayed back to the IoT devices. Likewise, aggregate results are sent to the Cloud, if required.

This gives the SK-TDA4VM EdgeAI device, which offers 8 trillion operations per second (8 TOPS), several advantages over traditional Cloud-based inferencing services, including:

- Reduced Latency: EdgeAI devices remove the need to transmit data between the IoT devices and the Cloud for every inference. This avoids the processing latency introduced by slow data links.

- Reduced Network Costs: Implementing a localised EdgeAI server reduces the bandwidth costs associated with maintaining a remote network connection.

- Improved Data Security: EdgeAI devices store information locally, making them more secure and the data less prone to snooping. An EdgeAI device may even be disconnected from a Wide Area Network (WAN), offering fewer opportunities to cyber attackers.

- Reliability: When data processing on the edge is distributed among several EdgeAI devices, collectively they make the system more reliable. An individual device failure need not cause the whole system to fail, an important property for critical IoT systems.

In the hypothetical example shown in the image above, the SK-TDA4VM starter kit is used as an artificial intelligence edge device on an intelligent remote farming project. IoT sensors dotted around the farm send their machine learning inferencing tasks to the starter kit for processing. The tasks could include leaf image classification for early plant disease detection, soil and temperature measurements for predicting seed germination, or even agricultural equipment data for preventive maintenance analysis.
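The data flow described above (sensors submit tasks, the edge device returns individual results and keeps aggregates for the Cloud) can be sketched in a few lines. This is an illustration under stated assumptions, not TI's API: `InferenceRequest`, `EdgeNode`, `classify` and the threshold rule standing in for a real model are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class InferenceRequest:
    device_id: str  # which IoT sensor sent the task
    task: str       # e.g. "leaf_image"
    payload: list   # raw sensor data (placeholder values)


def classify(request):
    """Stand-in for a real model running on the edge device.

    Hypothetical rule: label a leaf 'diseased' when the mean of the
    payload exceeds 0.5. A real deployment would call an ML runtime here.
    """
    mean = sum(request.payload) / len(request.payload)
    return "diseased" if mean > 0.5 else "healthy"


@dataclass
class EdgeNode:
    summary: Counter = field(default_factory=Counter)

    def handle(self, request):
        """Run inference locally and return the individual result to the device."""
        label = classify(request)
        self.summary[label] += 1  # only aggregate counts are kept for the Cloud
        return {"device_id": request.device_id, "label": label}

    def cloud_report(self):
        """Aggregate results that could be sent to the Cloud, if required."""
        return dict(self.summary)


if __name__ == "__main__":
    node = EdgeNode()
    print(node.handle(InferenceRequest("sensor-1", "leaf_image", [0.9, 0.8])))
    print(node.handle(InferenceRequest("sensor-2", "leaf_image", [0.1, 0.2])))
    print(node.cloud_report())
```

Note that the raw payloads never leave the edge node in this sketch; only per-device labels and aggregate counts do, which is the property behind the latency, cost and security advantages listed earlier.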

The kit offers many interesting possibilities and, when used off-grid, it could even be powered from a combination of wind (or hydro) power and lithium battery storage systems. A satellite link could also be used to provide WAN services when required.

SK-TDA4VM Inferencing Workflow

So, the potential of this kit is quite exciting and, although the data logger project is still a work in progress, I thought it would be a good idea to start inferencing work on the kit. To do so, I need to understand Texas Instruments' (TI's) EdgeAI workflow. One good way to do this is to align TI's "Hello World" tutorial with the beginner's "Basic classification: Classify images of clothing" tutorial found on the TensorFlow.org website. That tutorial demonstrates classifying clothing using the Fashion MNIST dataset.
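To give a feel for what "classifying clothing" produces, the sketch below turns ten raw model outputs (logits) into a named prediction. The class names are the ten Fashion MNIST labels used in the TensorFlow tutorial; the `softmax` and `predict_label` helpers and the example logits are illustrative only and are not taken from either tutorial's code.

```python
import math

# The ten Fashion MNIST class names, in label order (0 to 9).
CLASS_NAMES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]


def softmax(logits):
    """Convert raw model outputs (logits) into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return (class name, probability) for the most likely class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs[best]


if __name__ == "__main__":
    # Made-up logits in which the last class dominates.
    example_logits = [0.0] * 9 + [5.0]
    name, prob = predict_label(example_logits)
    print(f"{name}: {prob:.3f}")
```

Whichever runtime ends up producing the logits (TensorFlow on the Mac, TensorFlow Lite, or the accelerated path on the kit), this final step of mapping an output vector to a label stays the same.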

Even a poor plan is better than no plan at all - Mikhail Chigorin

To begin machine learning on the SK-TDA4VM, I plan to implement the action items listed in the table below.

| Item | Action | Corresponding Article |
|---|---|---|
| 1 | Run the Basic classification: Classify images of clothing tutorial on the Mac Mini M1. | Machine Learning Basic Classification (1): Classifying Clothing on the Mac Mini M1 |
| 2 | Convert the TensorFlow model used in the tutorial into a TensorFlow Lite model. | Machine Learning Basic Classification (2): TensorFlow Lite Model Converter |
| 3 | Run the Basic classification tutorial on TI's Edge AI (Jacinto or TDA4VM) cloud. | Machine Learning Basic Classification (3): Machine Learning on TI's EdgeAI Cloud |
|  | Compile a TensorFlow Lite model for inference on the AI Edge. | Interlude: TensorFlow Models on the Edge |
| 4 | Run it on TI's Edge AI Cloud tool with deep learning acceleration. | Machine Learning Basic Classification (4): EdgeAI Cloud - Jacinto ML Hardware Acceleration |
| 5 | Perform ML inferencing remotely using a Jupyter Notebook or TensorFlow.js. | (5): SK-TDA4VM: Remote Login, Jupyter Notebook and Tensorflow.js |
| 6 | EdgeAI Machine Learning Series | |

Conclusion

In this article I have briefly summarised the EdgeAI use case of the SK-TDA4VM and presented an example of how it could be used as part of an intelligent farming project. Although work on a wireless data logger to monitor the kit's power consumption is still in progress, inferencing will begin using a combination of a Mac Mini M1, TI's EdgeAI Cloud and the SK-TDA4VM Starter Kit itself.

Well, that's all for now, folks! The results of each of the steps listed above will be presented in future articles. So stay tuned.

Follow-Up Articles

Articles in this Series

Preparatory Steps

Basic Classification (1/4) - Classifying Clothing on the Mac Mini M1

Basic Classification (2/4) - Tensorflow Lite Model Conversion

Basic Classification (3/4) - Machine Learning on TI's EdgeAI Cloud

Basic Classification (4/4) - EdgeAI Cloud - Jacinto ML Hardware Acceleration

Classification on the AI Edge

(5) SK-TDA4VM: Remote Login, Jupyter Notebook and Tensorflow.js

(6) SK-TDA4VM: An Improved Fashion Classification DNN

(7) - Category List

References